AI Guardrails for AI-assisted coding

Automated quality gates for AI coding

An AI assistant can generate code faster than ever, but without guardrails it also generates technical debt and other code quality issues. Set quality standards for AI code with CodeScene's automated process.

Empowering the world’s top engineering teams

AI meets quality

How it works

From AI-generated code to production — CodeScene's quality gates ensure only healthy, maintainable code enters your codebase.

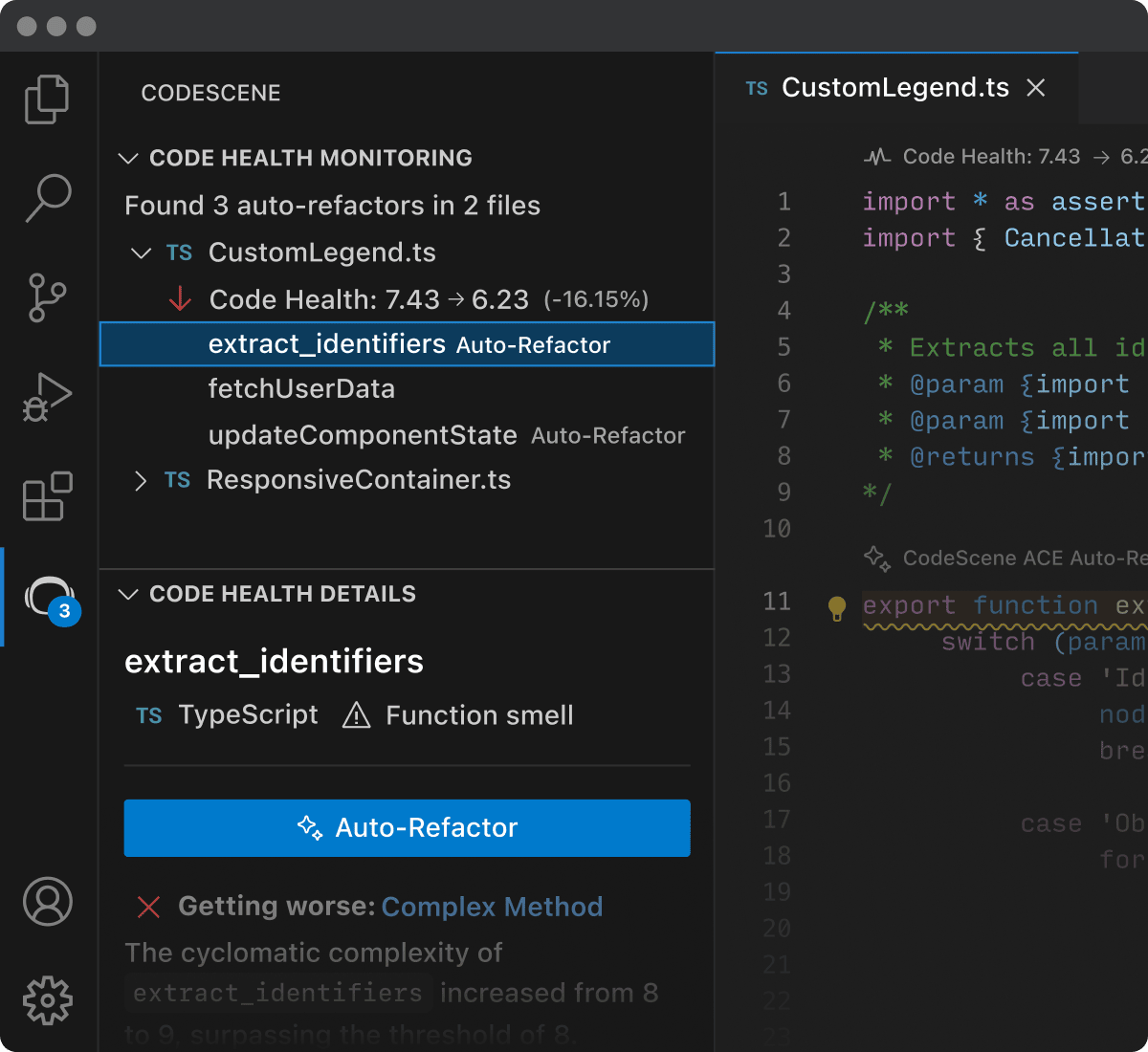

Real-time AI quality gates in your IDE

Quality-check generated code with real-time alerts for any introduced code smells. Automatically refactor with AI-powered suggestions.

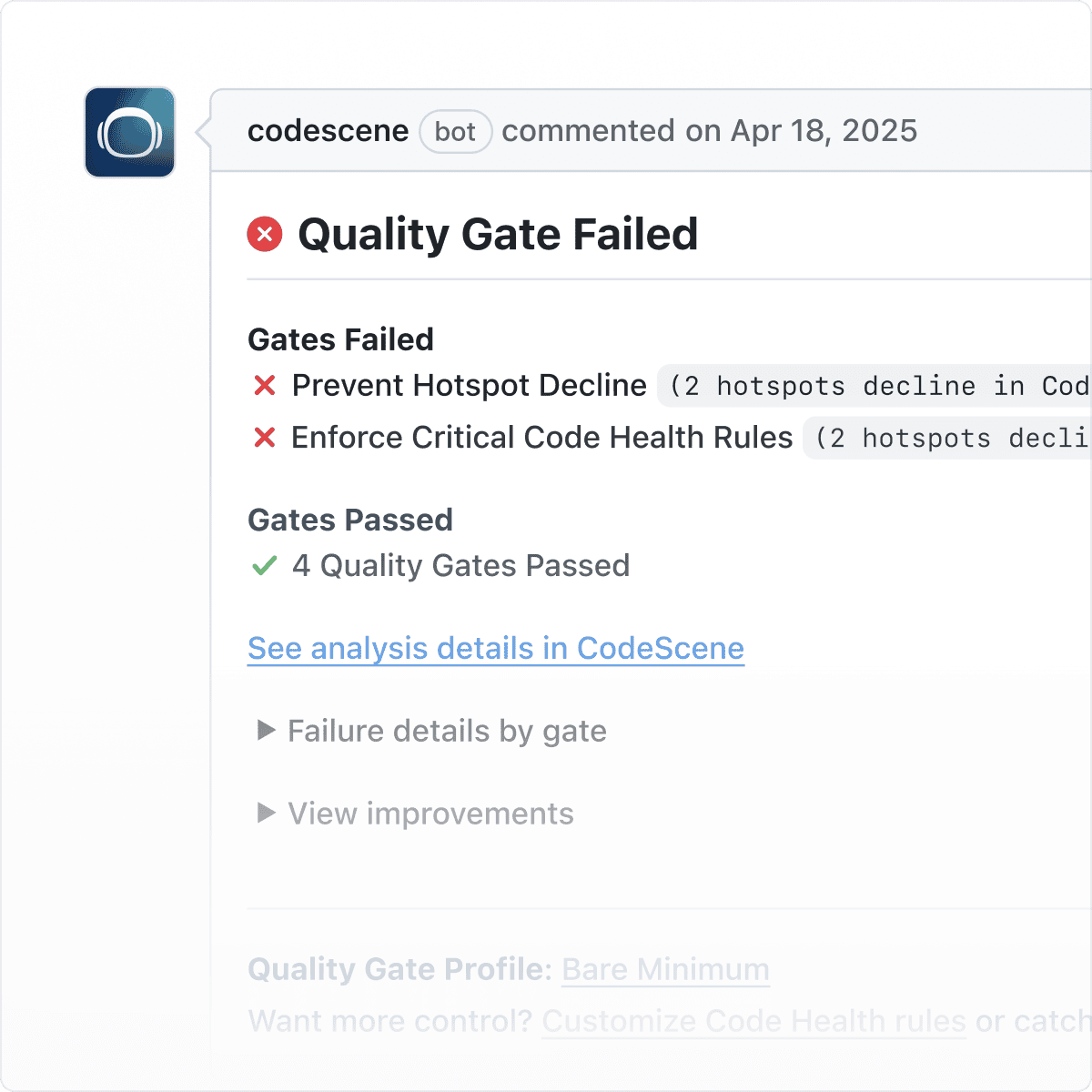

Automated Code Reviews

CodeHealth™ checks coach developers and act as a quality gate in every pull/merge request. Act on recommendations and alerts.

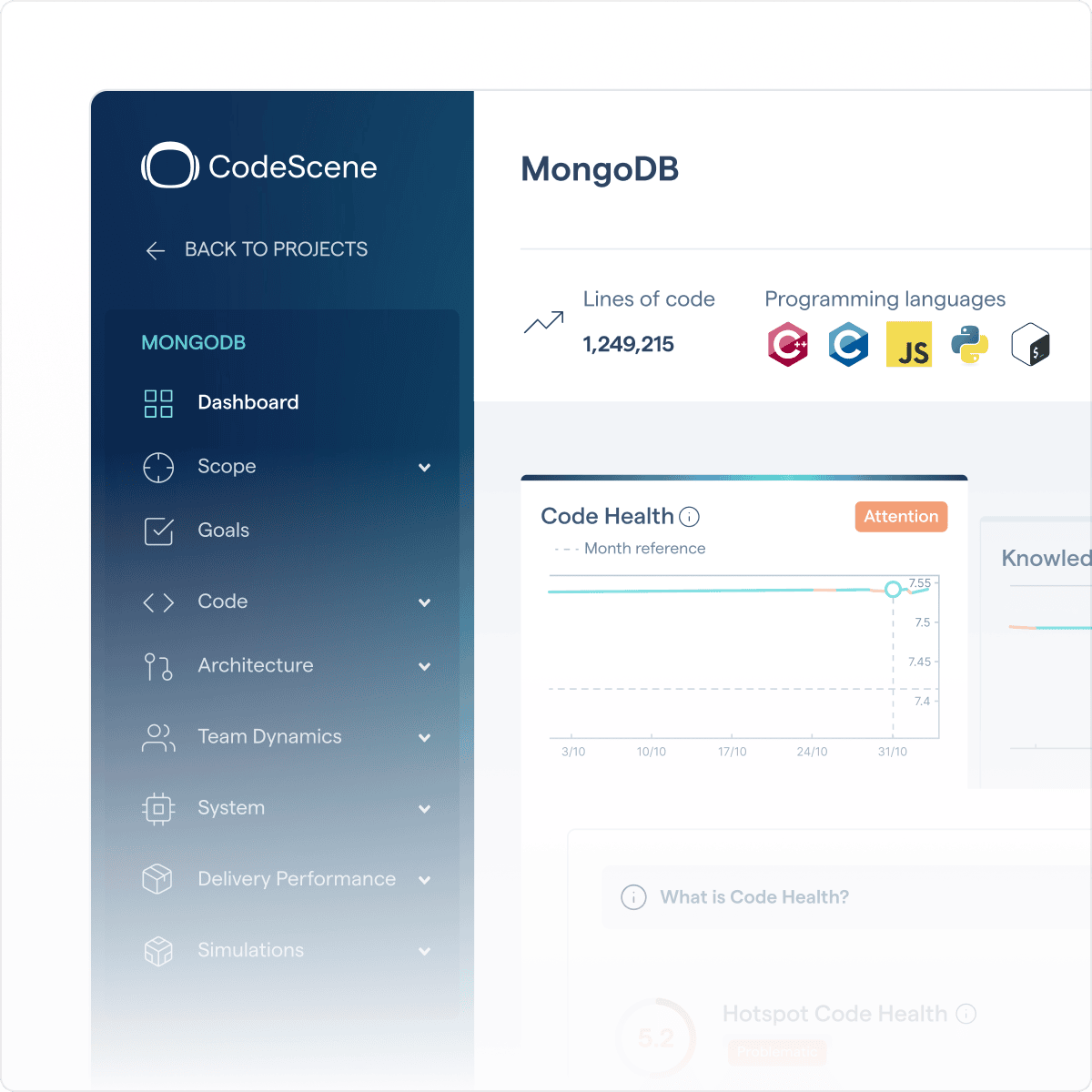

Monitor trends & take action

Track code quality trends with the CodeHealth™ dashboard and respond to any decline before it impacts delivery.

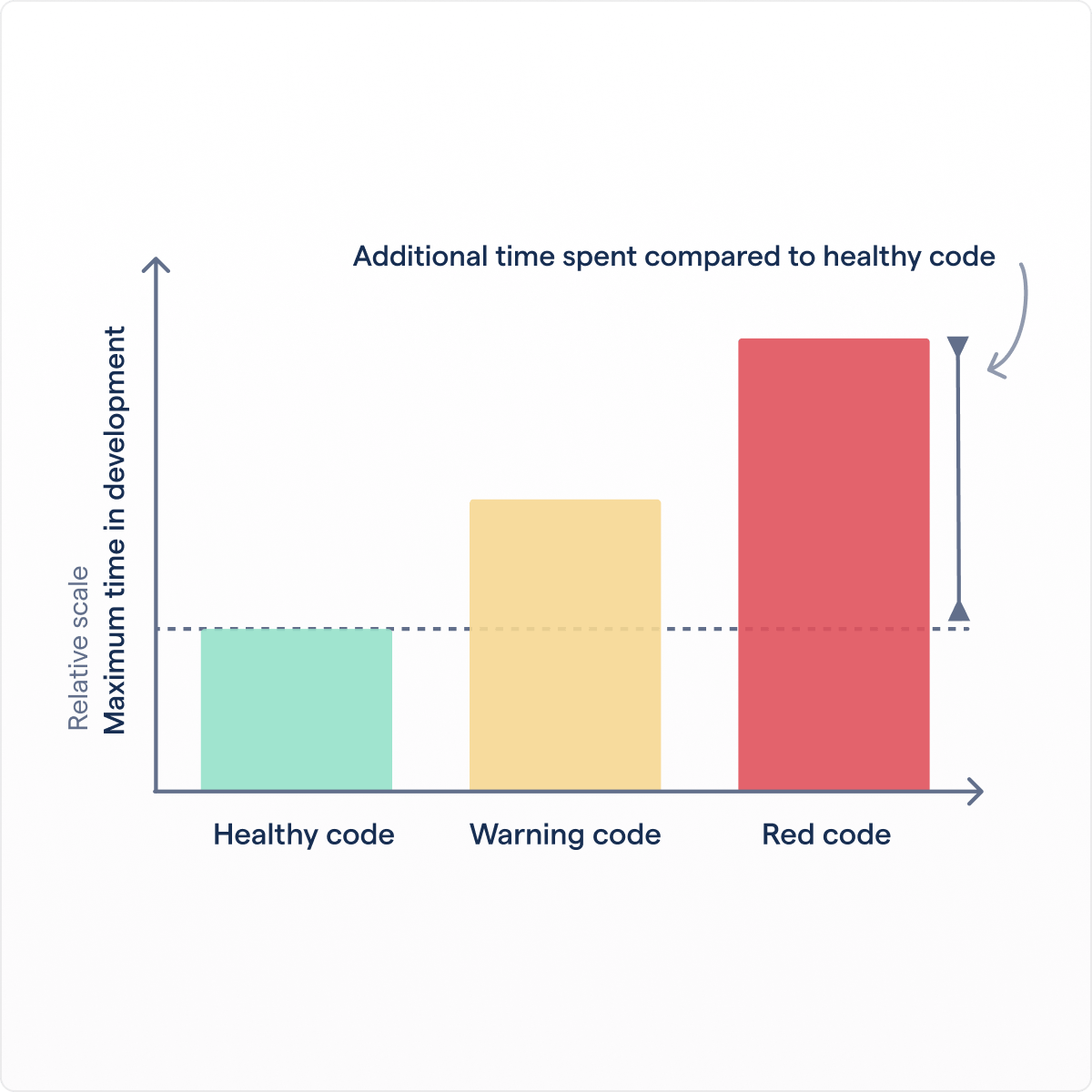

The hidden cost of AI-written code

The rise of technical debt from AI-written code

AI accelerates development—but without safeguards, it quietly builds up technical debt that slows you down later.

Technical debt drains developer productivity

40%

of a developer’s time is lost to technical debt — a number expected to rise as AI coding assistants accelerate code production without proper safeguards.

Rise of technical debt by 2026

50%

of tech leaders will be impacted by high technical debt by 2025, rising to 75% by 2026 due to AI’s rapid growth. Source: Forrester

How it works

AI code quality assurance - 3-step automated process

CodeScene gives you full visibility and control over your AI rollout, ensuring AI accelerates your team rather than holding it back.

Step 1 - IDE extension

Real-time alerts when AI code fails quality checks

Real-time Code Health feedback and fixes help devs validate that AI-assisted code remains readable, scalable, and maintainable. Code smells are detected instantly. Works with all major languages and integrates seamlessly with Copilot, Cursor, and other AI assistants.

Developers can fix technical debt automatically with our AI-powered refactoring add-on.

Step 2 - Automated Code Reviews

Enforce code quality, set quality gates

Every line of code, AI-generated or handwritten, is reviewed. Teams define their code quality standards, and CodeScene automates enforcement via Pull/Merge Request reviews.

Code Health checks act as both a coach and a quality gate, preventing technical debt or other code quality issues from reaching production.
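
To make the quality-gate idea concrete, here is a minimal sketch in Python. It is not CodeScene's API or scoring model: the `FileScore` type, the `evaluate_gate` function, the score scale (higher means healthier), and both thresholds are hypothetical names and values chosen only for illustration. It assumes some tool has already produced a health score for each changed file before and after the pull request.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class FileScore:
    """Hypothetical per-file health score; higher means healthier."""
    path: str
    before: Optional[float]  # None when the file is new in this pull request
    after: float


def evaluate_gate(
    scores: List[FileScore],
    min_new_file_score: float = 9.0,  # assumed threshold, not a CodeScene default
    max_decline: float = 0.0,         # assumed: existing files may not get worse
) -> List[str]:
    """Return one failure message per file that violates the gate."""
    failures = []
    for s in scores:
        if s.before is None:
            # New files must start out above the agreed minimum.
            if s.after < min_new_file_score:
                failures.append(
                    f"{s.path}: new file scores {s.after:.1f}, "
                    f"below the minimum of {min_new_file_score:.1f}"
                )
        elif s.before - s.after > max_decline:
            # Existing files must not decline by more than the allowed margin.
            failures.append(
                f"{s.path}: score declined from {s.before:.1f} to {s.after:.1f}"
            )
    return failures
```

A CI job could call `evaluate_gate` on the changed files and block the merge when the returned list is non-empty. The same rule applies whether the code was AI-generated or handwritten.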

Step 3 - Interactive dashboards

Monitor AI-generated code with full visibility

Technical leaders and teams need full visibility into the codebase, code health trends, and the ability to react to critical changes. AI-generated code should meet the same quality standards as human-written code.

CodeHealth™ delivers the industry’s only validated technical debt metric, ensuring AI-driven development stays efficient and defect-free.
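
As a rough illustration of reacting to a declining trend, the sketch below flags a drop when the latest score falls noticeably below the recent average. It is an assumption-laden example, not how the CodeHealth™ dashboard works: the `detect_decline` function, the `window` size, and the `tolerance` value are all hypothetical, and the input is simply a list of health scores ordered from oldest to newest.

```python
from statistics import mean
from typing import Sequence


def detect_decline(
    history: Sequence[float],
    window: int = 3,         # assumed: compare against the last three snapshots
    tolerance: float = 0.2,  # assumed: ignore drops smaller than this
) -> bool:
    """Return True when the latest score is more than `tolerance`
    below the mean of the `window` scores that precede it."""
    if len(history) < window + 1:
        # Not enough snapshots to form a baseline.
        return False
    baseline = mean(history[-(window + 1):-1])
    return baseline - history[-1] > tolerance
```

For example, `detect_decline([9.0, 9.1, 9.0, 8.5])` flags a decline, while a series that dips by only 0.1 stays within the tolerance and does not.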

AI code quality, covered

Stay in control of AI-generated code at every level

From strategic oversight to real-time safeguards—empower leads, teams, and developers to manage technical debt and ensure quality across the AI-driven workflow.

For technical leads

Monitor AI-generated technical debt to ensure long-term code health and development speed.

For teams

Enforce AI code quality with automated reviews that prevent risks and maintain consistency.

For developers

Real-time technical debt prevention in the IDE to write cleaner, more maintainable code.

Industry-leading CodeHealth™ KPI

Metrics you can trust

Code quality is often subjective, but our IDE extension and code reviews rely on the proven CodeHealth™ metric to offer clear, research-based recommendations. This means cleaner code, shorter development cycles and fewer bugs.

Our research proves that healthy code has 15x fewer bugs

15x

Fewer bugs

With healthy code, you can double the speed of your feature delivery

2x

Faster feature delivery

With healthy code, it's nine times more likely that features will be delivered on time

9x

More likely to deliver on time

Guardrails for AI code

Remove AI-generated technical debt

Get real-time code health monitoring for the AI coding assistants you already use, and prevent new technical debt from entering your codebase. Auto-refactor directly in your IDE.

Faster time to market, improved quality

Key benefits

You can have it all. Implement guardrails to ensure AI-generated code quality while still benefiting from AI coding assistants.

Validated Code Health KPIs

Set a baseline for your AI-generated code. Our Code Health metric has a proven link to speed and quality, validated through peer-reviewed research.

High quality

Ensure AI-generated code meets the same quality standards as human-written code before releasing to production.

Prevent technical debt

Any technical debt or code quality issue is automatically detected by CodeScene's quality gates. Review and apply suggested fixes.

Monitor critical code

Monitor critical areas of your codebase for changes and for sudden increases in code complexity.

Maximize the value of your AI-generated code

Guarantee code quality, fast delivery, and maintainability right from the start.